Research experiments: adversarial examples based on painted faces - 2

30 June 2020

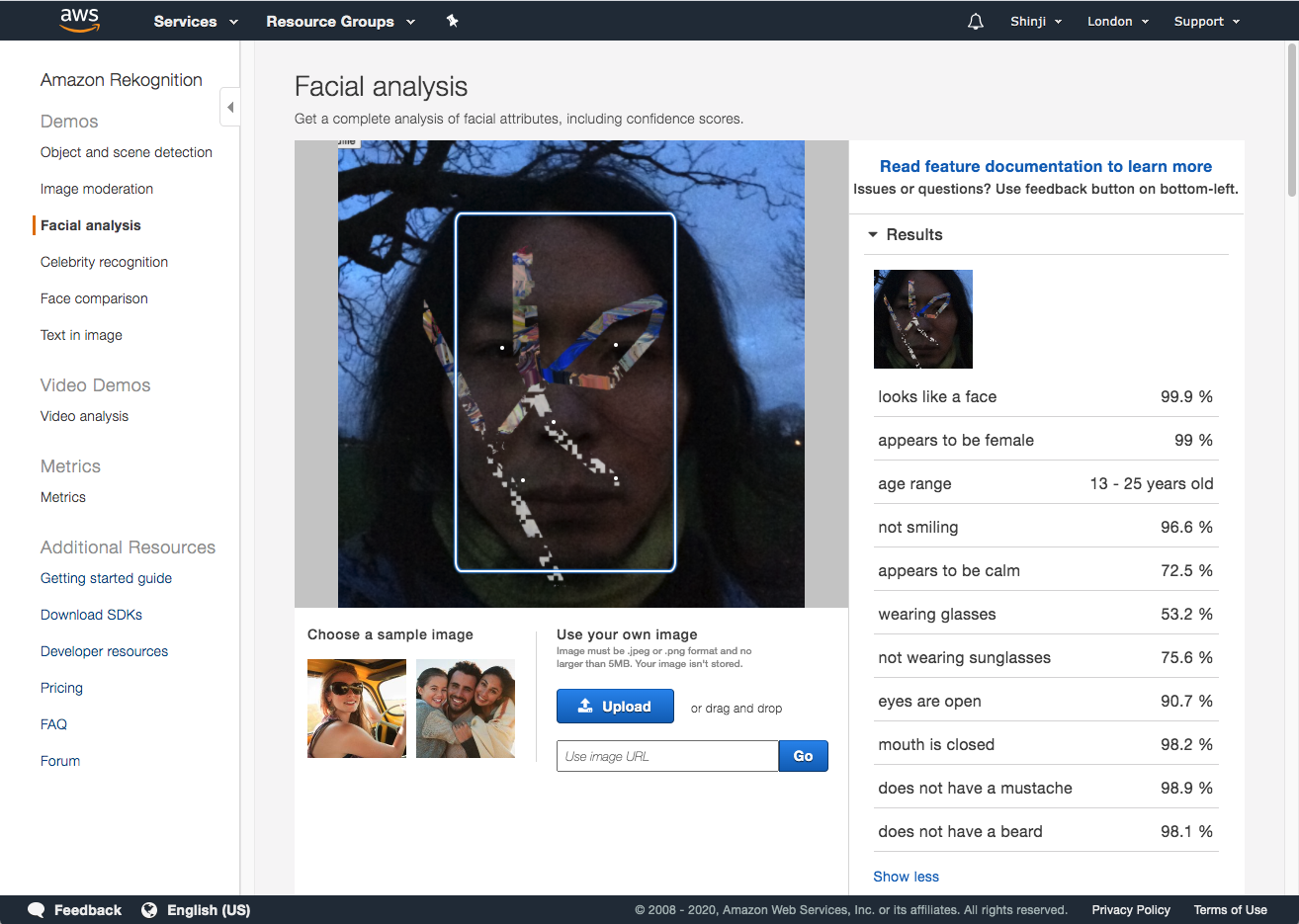

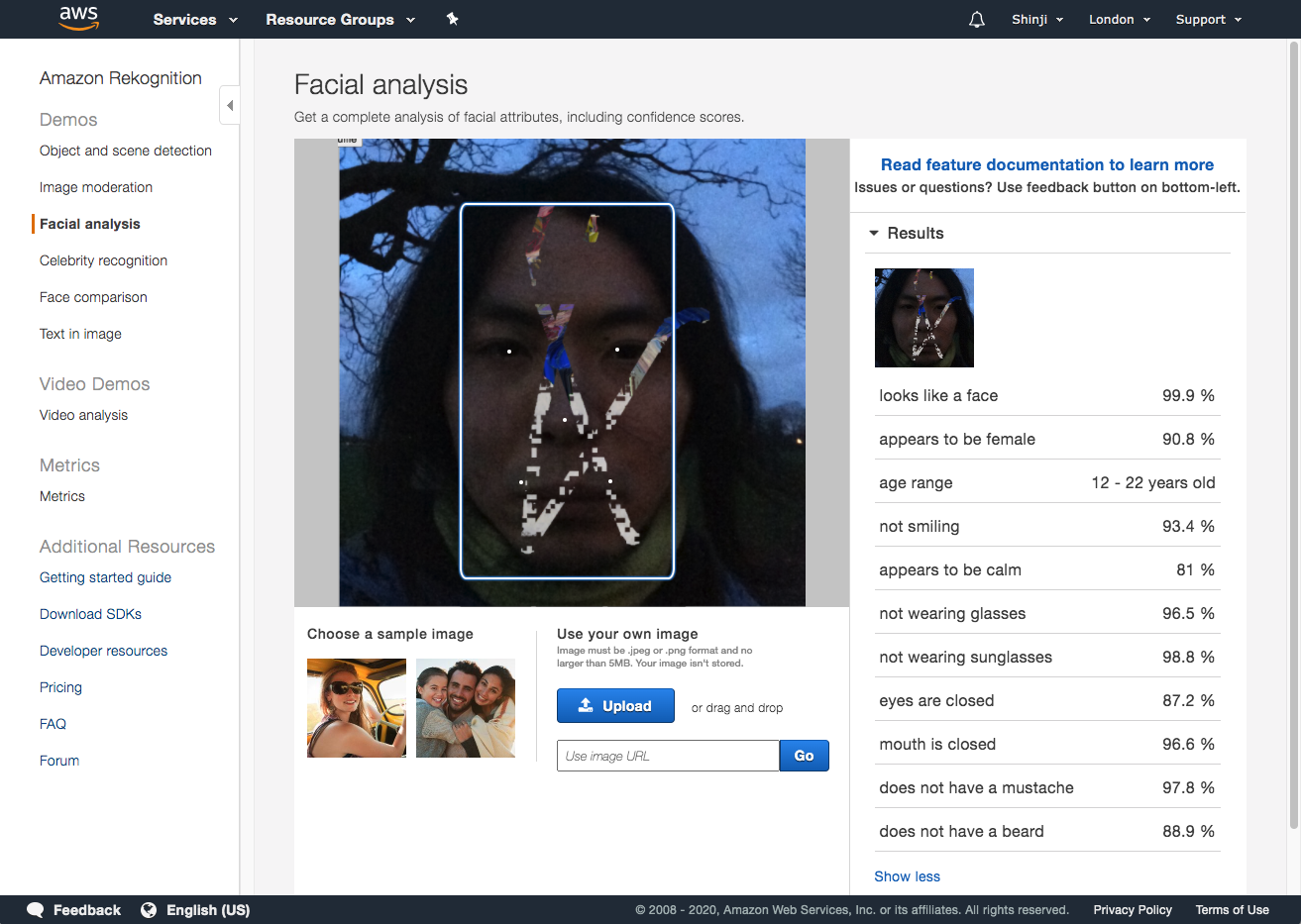

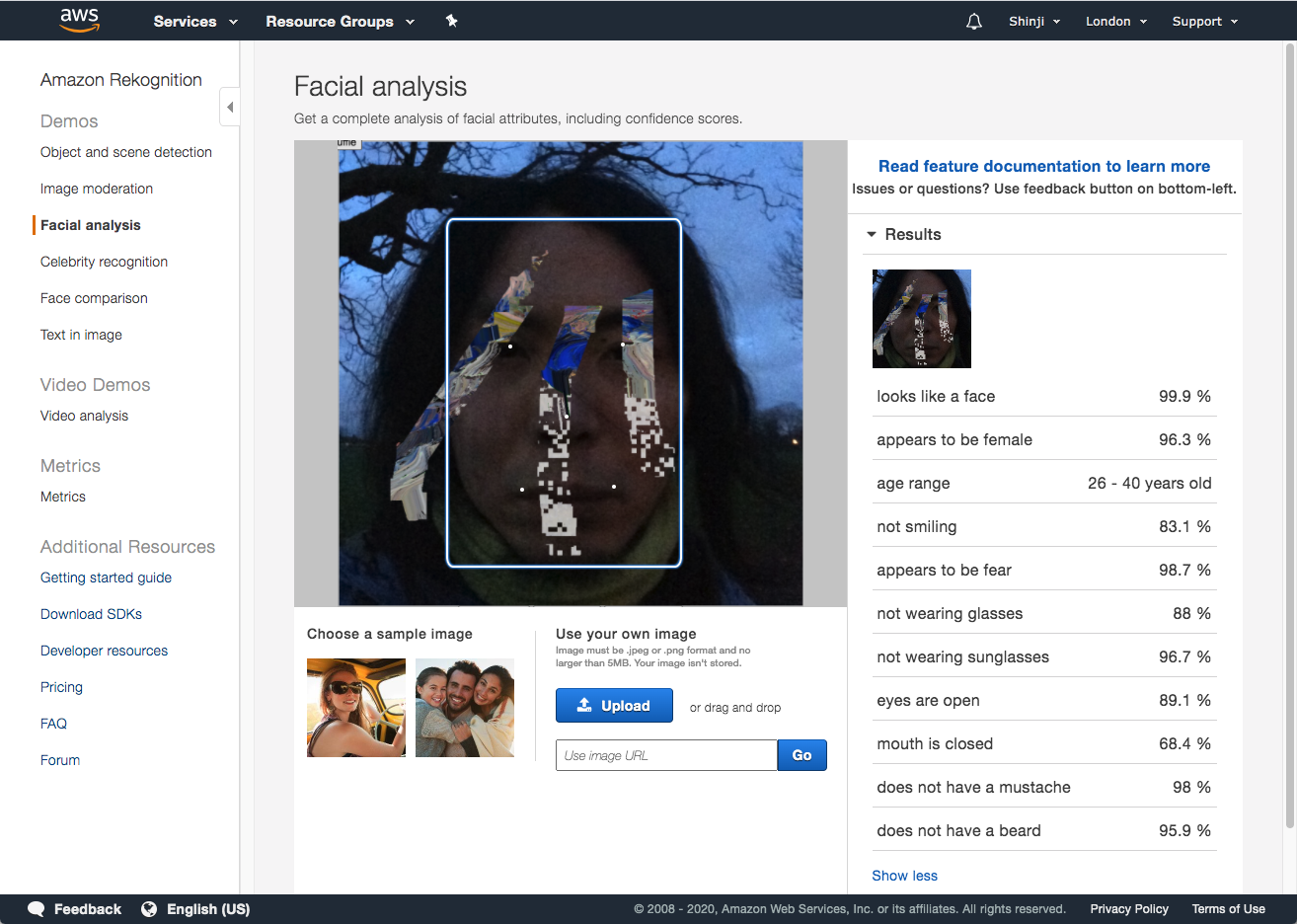

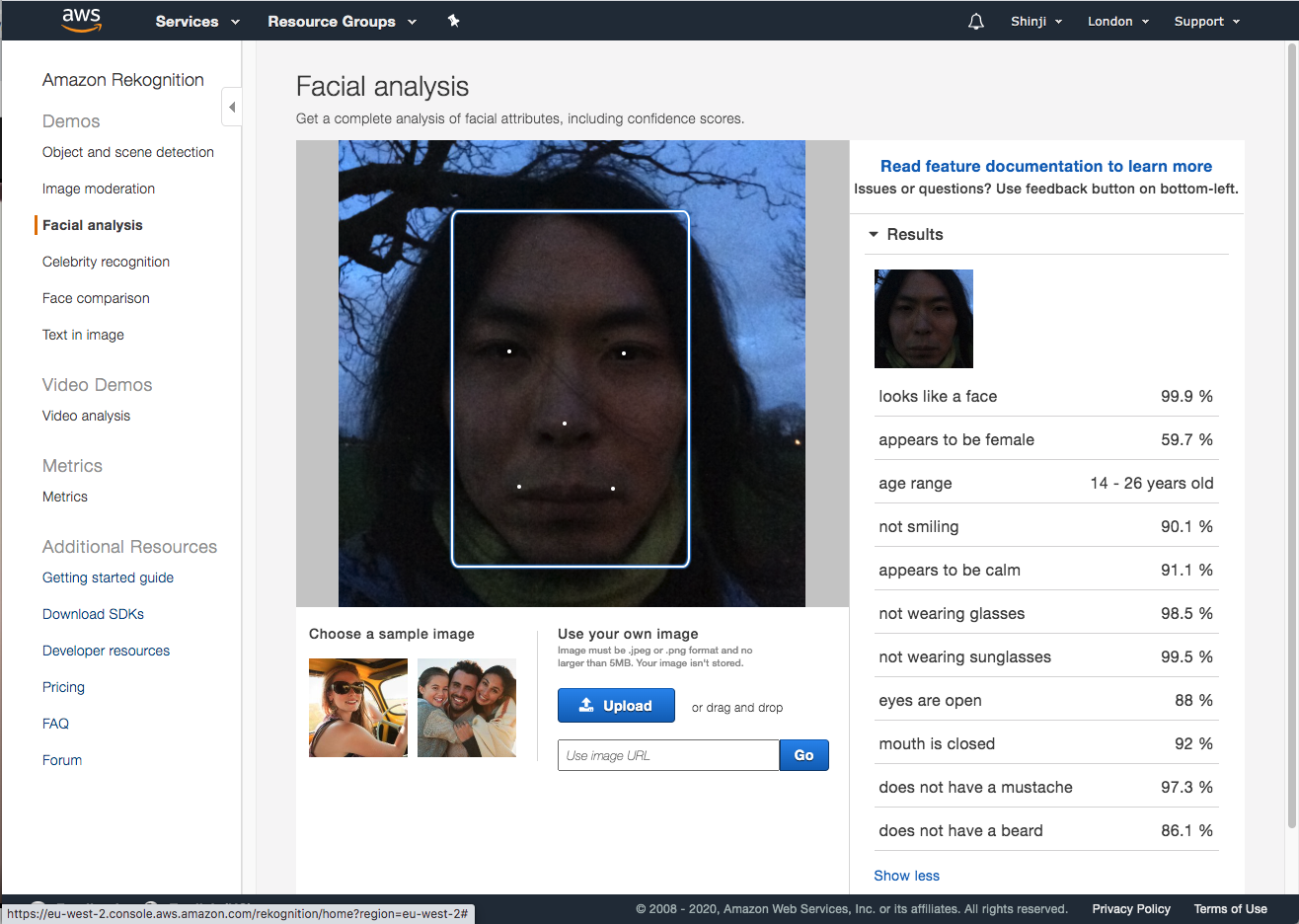

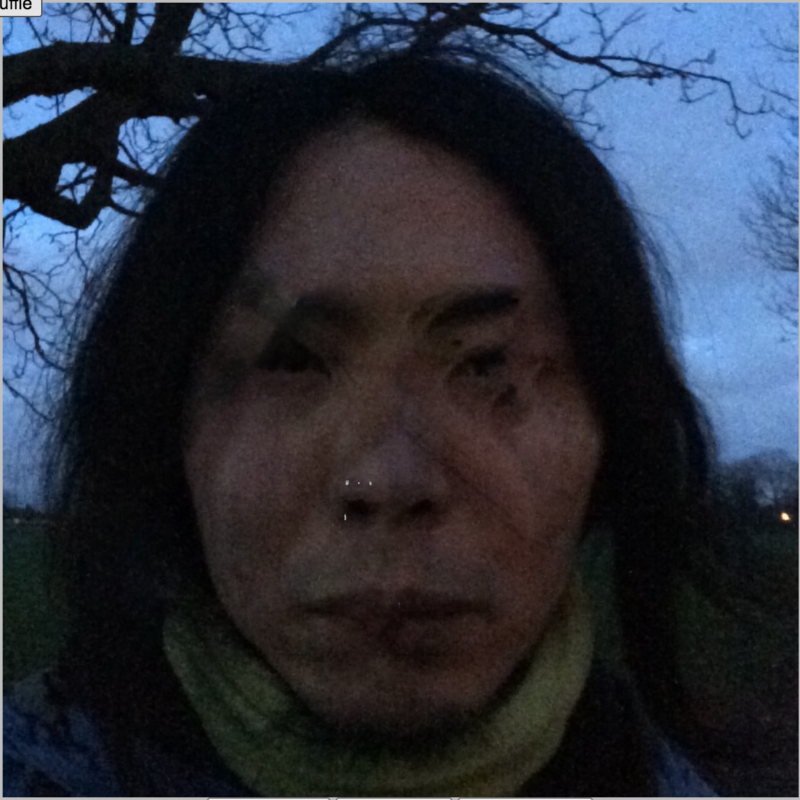

↓ Some adversarial examples of painted faces produced with the painter; they evade face detection by this model. Although Amazon Rekognition still detects these faces, the results of its facial analysis appear perturbed compared to the original:

↑ The last one is the original.

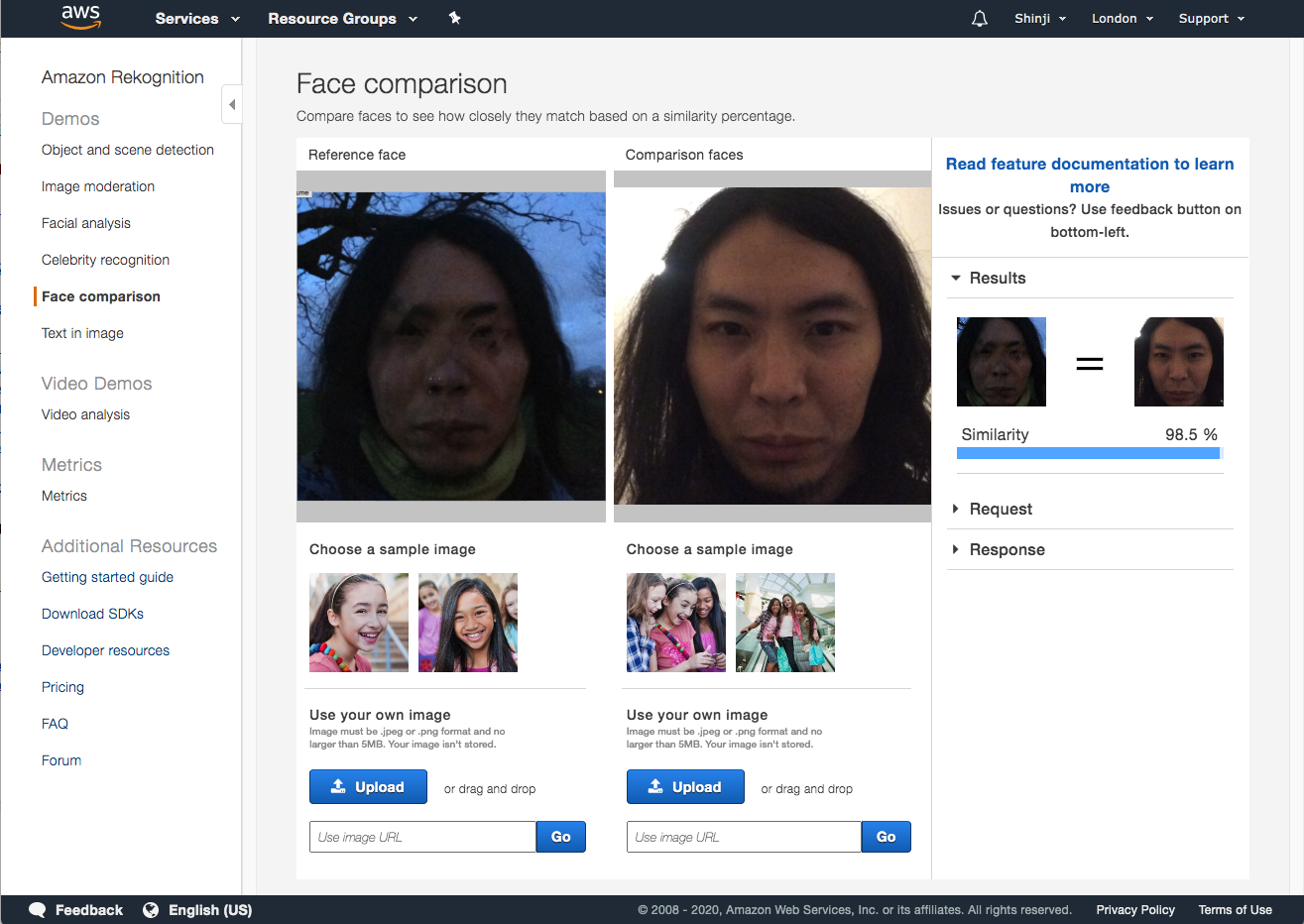

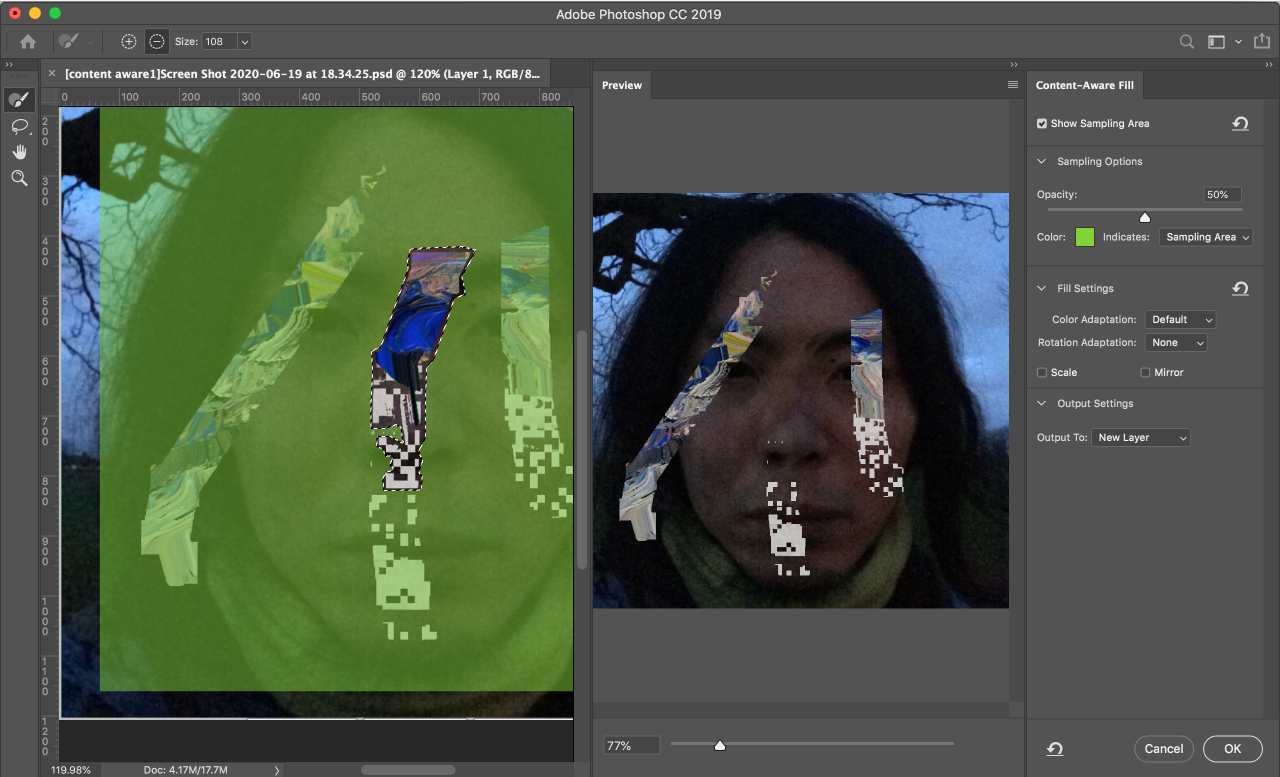

↓ However, the paint patterns applied to the above examples are simple enough to be reversed with Photoshop's Content-Aware Fill:

↑ This is the reversed face.

↑ Now it appears I'm identified again from the reversed portrait.

In terms of ruining the biometric data, the above is not a fool-proof strategy. But unless data scrapers build and use a fully automated Content-Aware Fill or something similar, even the adversarial examples above could render faces useless if the technique is applied to many of the images to be scraped. Training (or retraining) facial recognition requires many samples, so if many of the images scraped for a dataset have been tampered with, removing the paint from all of them becomes very inefficient.
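To make the "fully automated" concern concrete, here is a minimal sketch of one automated fill technique, diffusion-based inpainting, in plain Python. It is not what Photoshop's Content-Aware Fill does (that is reportedly patch-based synthesis), and it assumes the scraper already has a mask marking the painted pixels, which in practice is the hard part. The grayscale list-of-lists image, the `diffuse_inpaint` function and the toy 5x5 data are all hypothetical.

```python
from typing import List

Image = List[List[float]]  # hypothetical grayscale image, values 0-255
Mask = List[List[bool]]    # True where paint covers the face (assumed known)


def diffuse_inpaint(image: Image, mask: Mask, iterations: int = 200) -> Image:
    """Fill masked pixels by repeatedly averaging their 4-connected neighbours.

    Unmasked pixels are never changed, so their values diffuse inward
    and gradually replace the painted region (a Jacobi-style iteration).
    """
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]  # copy; the input is left untouched
    for _ in range(iterations):
        nxt = [row[:] for row in out]
        for y in range(h):
            for x in range(w):
                if not mask[y][x]:
                    continue  # keep original (unpainted) pixels as-is
                neighbours = [
                    out[ny][nx]
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                    if 0 <= ny < h and 0 <= nx < w
                ]
                if neighbours:
                    nxt[y][x] = sum(neighbours) / len(neighbours)
        out = nxt
    return out


# Usage: a flat 5x5 "skin" patch (value 100) with a 3x3 blob of paint (255).
face = [[100.0] * 5 for _ in range(5)]
paint = [[1 <= y <= 3 and 1 <= x <= 3 for x in range(5)] for y in range(5)]
for y in range(5):
    for x in range(5):
        if paint[y][x]:
            face[y][x] = 255.0

restored = diffuse_inpaint(face, paint)
print(round(restored[2][2], 3))  # → 100.0
```

Diffusion only smooths surrounding colour inward from the edges of the mask, so it can undo small patches of paint over flat skin but blurs away texture; the larger and more intricate the painted area, the less such a simple fill can recover.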

Also, people who want to avoid being identified by facial recognition can always apply more paint, so that the facial data becomes more enmeshed with it.